The evolution of code review practices in the world of AI

Code reviews are being redefined by AI, and I think they're heading in a good direction. Let's look at some of the developments in this area, and at tools like CodeRabbit.

The world of software development is abuzz with AI's transformative power, with most of the attention on its ability to generate code. However, coding is only one part of the intricate software development process. While everyone is arguing about vibe coding or hopping between an endless stream of new VS Code forks, LLMs are being adopted very successfully in a few other areas, for example code reviews.

I don’t know about you, but I love code reviews.

The power of code reviews

There are different ways to conduct a code review (mailing lists, pull/merge requests, even code printouts), but they all serve the same main purposes.

🐛 Help catch bugs early, which can save time and resources later in the development cycle.

✅ Help maintain high code quality and enforce coding standards, performance considerations, etc.

💬 Improve team communication. Code reviews create a platform for discussing design decisions and coding standards. This dialogue can lead to better solutions and a more cohesive team.

📚 Promote knowledge sharing among team members. When developers review each other's code, they learn different approaches and techniques, which can enhance their skills.

Many would say that you only need a code review process in teams, but I would argue that it's also helpful when you're working on a project alone, mainly for documentation and self-review.

But code reviews are time-consuming. Reviewing code manually takes hours and slows down development. There are other challenges as well. For example, different reviewers have different standards, leading to inconsistent feedback. Reviewers may overlook issues due to familiarity with the project. And as teams grow, manual review processes become bottlenecks.

I have a good quote for you about these inconsistencies, which unfortunately is true all too often:

Give a developer a 10-line Pull Request and they'll find 10 issues. Give them a 500-line one and they'll just say, "LGTM" :)

AI-powered code reviews

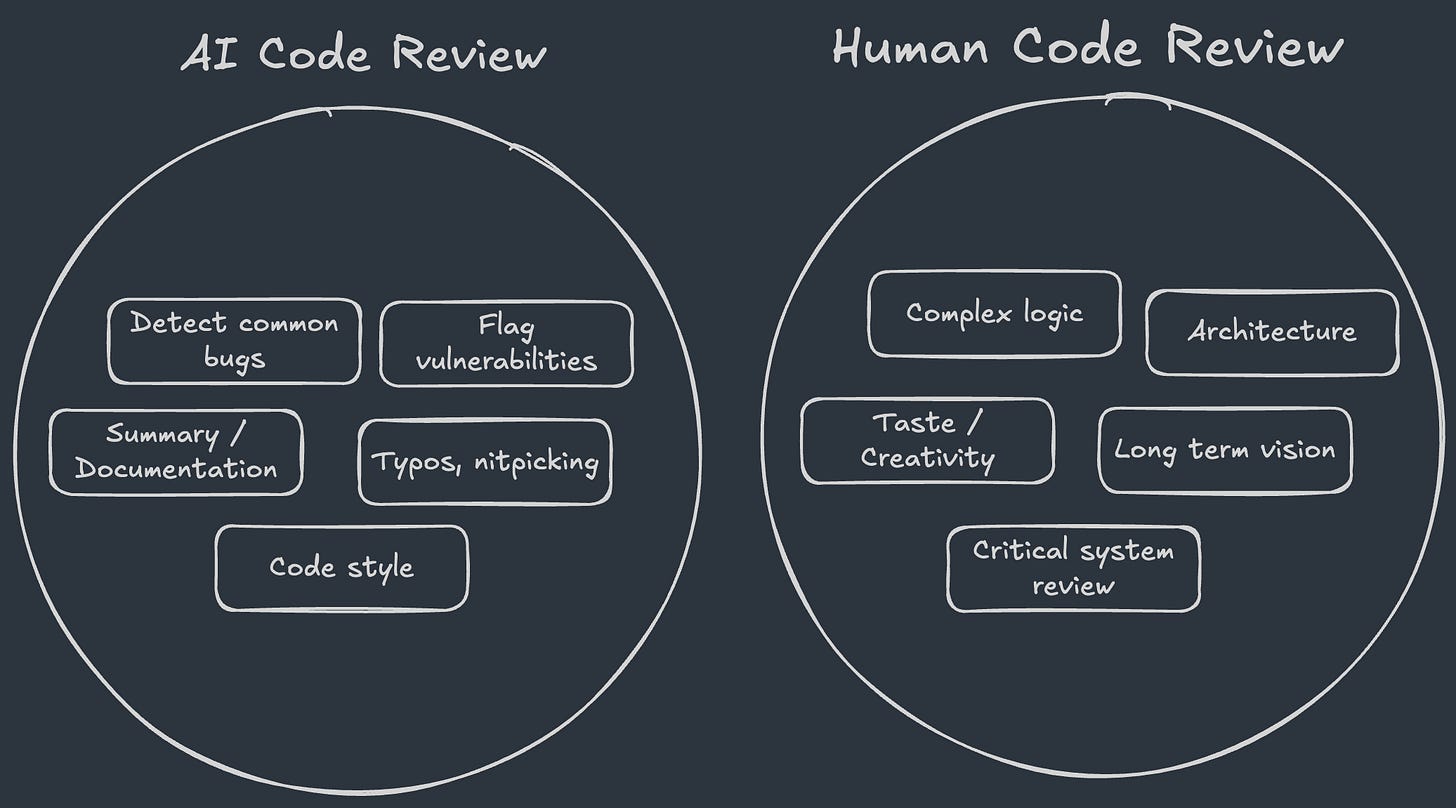

LLMs can help with these issues quite well, and can be a great companion to software developers. I say “companion” because LLMs excel at the routine parts, while humans excel at software design and architecture decisions: they have taste and know the software system inside out. And that's great. Let's outsource the routine, boring tasks to LLMs and have more time for creativity.

There is probably a world where these two circles intersect a bit, as nothing is black and white.

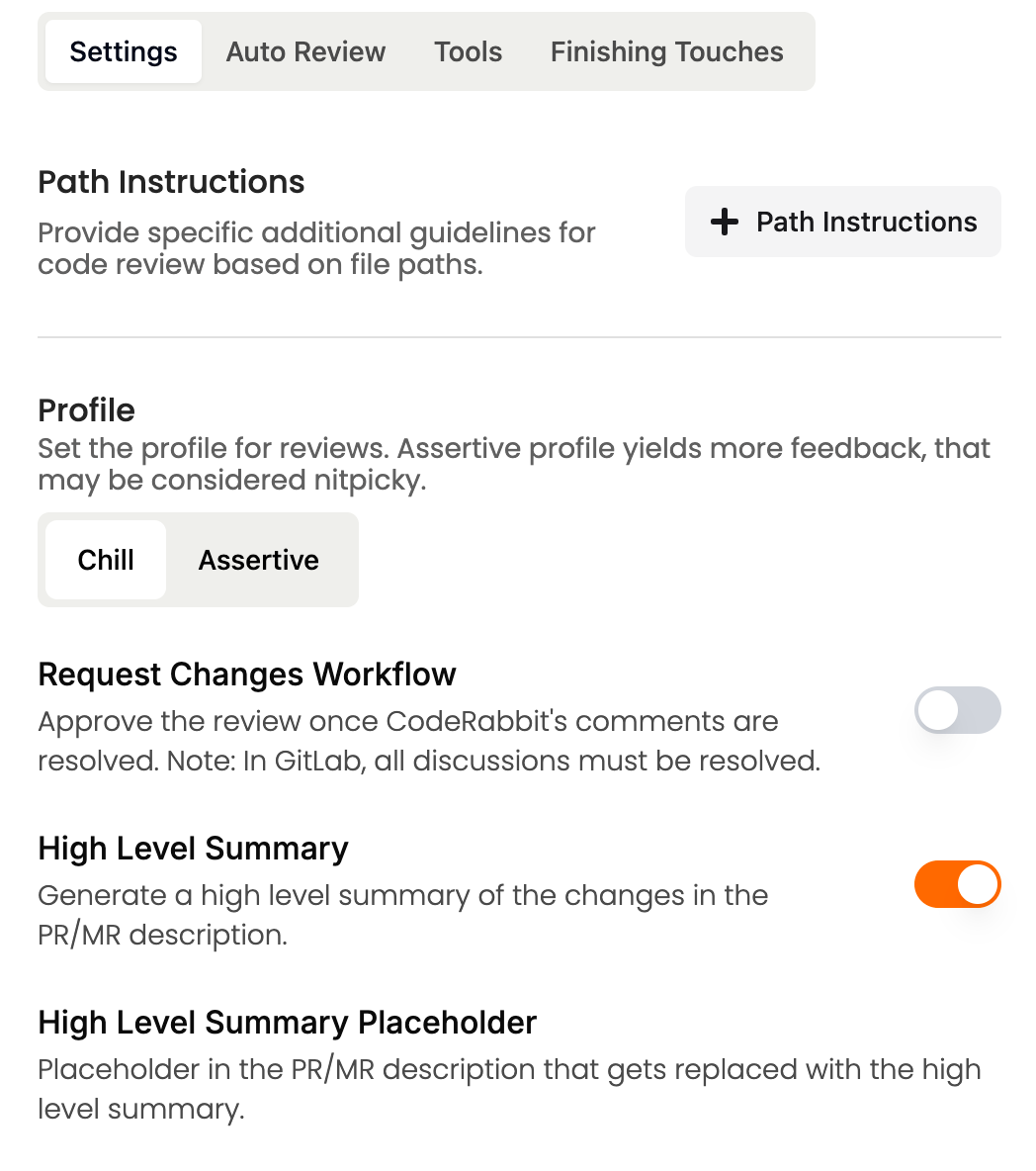

Let's move closer to the tools. It's extremely easy to integrate such solutions into existing systems and workflows, as they don't require teams to radically change the way they work. So far I have only used GitHub Copilot and CodeRabbit, and both were really easy to set up on GitHub. But I find CodeRabbit's settings more advanced, as you can fine-tune everything at the per-repository level.

Once the integration is ready, these tools start automatically reviewing your pull requests and enriching them with additional info, such as a summary, comments, and suggestions.
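To make the "routine" part a bit more concrete, here is a minimal Python sketch of the most mechanical piece of a PR summary: counting added and removed lines per file in a unified diff. It is only an illustration under my own assumptions, not how CodeRabbit or GitHub Copilot work internally; the function name `summarize_diff` and the sample file `client.go` are hypothetical.

```python
import re
from collections import defaultdict

# Matches a unified-diff hunk header such as "@@ -1,3 +1,4 @@".
# A missing line count means 1, as in "@@ -5 +5 @@".
HUNK_HEADER = re.compile(r"^@@ -\d+(?:,(\d+))? \+\d+(?:,(\d+))? @@")


def _strip_prefix(path):
    """Drop the "a/" or "b/" prefix that git puts in front of file paths."""
    return path[2:] if path.startswith(("a/", "b/")) else path


def summarize_diff(diff_text):
    """Return {file: {"added": n, "removed": m}} for a unified diff."""
    stats = defaultdict(lambda: {"added": 0, "removed": 0})
    old_path = current_file = None
    old_left = new_left = 0  # lines still expected in the current hunk

    for line in diff_text.splitlines():
        if old_left > 0 or new_left > 0:
            # Inside a hunk: classify by the first character only, so a
            # removed line like "-- sql comment" is not taken for a header.
            if line.startswith("+"):
                stats[current_file]["added"] += 1
                new_left -= 1
            elif line.startswith("-"):
                stats[current_file]["removed"] += 1
                old_left -= 1
            elif not line.startswith("\\"):  # skip "\ No newline at end of file"
                old_left -= 1
                new_left -= 1
        elif line.startswith("--- "):
            old_path = _strip_prefix(line[4:].split("\t")[0].strip())
        elif line.startswith("+++ "):
            new_path = line[4:].split("\t")[0].strip()
            # A deleted file has "/dev/null" as its new path; report the old one.
            current_file = old_path if new_path == "/dev/null" else _strip_prefix(new_path)
        else:
            match = HUNK_HEADER.match(line)
            if match and current_file is not None:
                old_left = int(match.group(1) or 1)
                new_left = int(match.group(2) or 1)
    return dict(stats)


# Hypothetical diff, for illustration only.
sample = "\n".join([
    "diff --git a/client.go b/client.go",
    "--- a/client.go",
    "+++ b/client.go",
    "@@ -1,3 +1,4 @@",
    " package paypal",
    '-import "fmt"',
    '+import "errors"',
    '+import "net/http"',
    " func main() {}",
])

print(summarize_diff(sample))  # {'client.go': {'added': 2, 'removed': 1}}
```

Numbers like these are the easy part. The interesting work, explaining what the change means, is where an LLM comes in, but even this bookkeeping is something no human should have to do by hand.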

Disclaimer: I am a big fan of CodeRabbit, and am grateful they partnered on this article. However, I will try to only write my honest opinion on this topic of code review practices using AI and tools like CodeRabbit.

Team vs Solo

In a team, there are likely already processes in place that involve some code review step. It can be a slow and blocking phase. Developers need to switch context to review someone else's code, then spend a lot of time writing the summary of their own PR. I honestly remember days when half of my day went to reviewing code. I am sure it would have been much faster had I been using something like CodeRabbit.

But I also want to talk about projects where there is no clear concept of a “team” (for example an OSS project), or your own solo projects.

For example, as a maintainer of an open source project, you may receive a pull request and spend a lot of your free time understanding what the change is about, then weeding out obvious errors, etc.

And this is exactly where I see great value in tools like CodeRabbit.

Real example

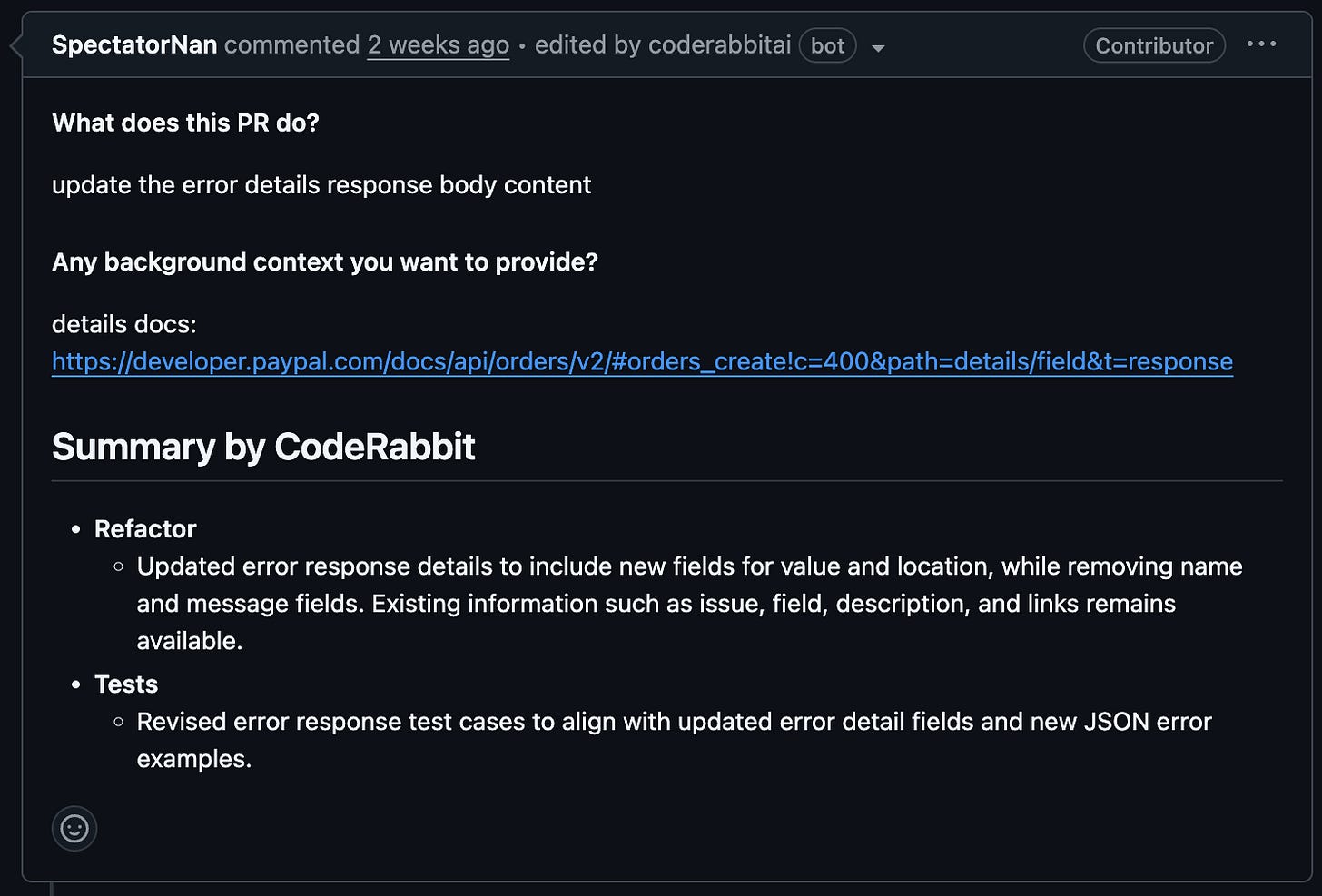

Let's take an example from a real project I'm maintaining: plutov/paypal. A few days ago I received a new PR from a contributor, and when I opened it, it had a nice summary:

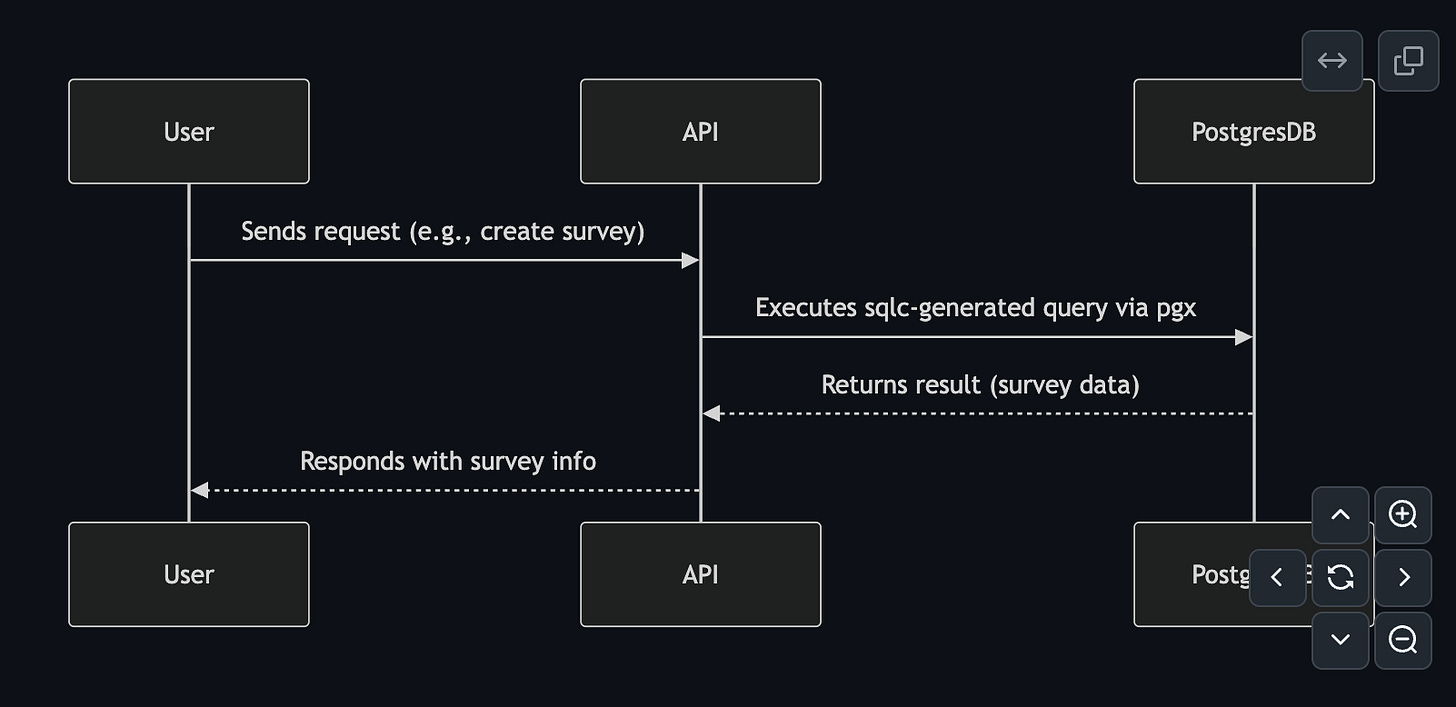

That helped both me and the author of the PR. On another PR, CodeRabbit was able to generate a sequence diagram to explain the database communication. Isn't that cool?

And you can also work with CodeRabbit directly in VS Code (no Neovim yet, unfortunately).

Conclusion

In conclusion, integrating AI into the code review process isn't about replacing humans, but about augmenting them: automating routine tasks so that engineers can concentrate on higher-order concerns like architecture, business logic, and scalability.

And if you want to learn more about CodeRabbit, check the link below. It's free.