Terminating elegantly: a guide to graceful shutdowns


Have you ever unplugged your computer out of frustration? In the world of software, a similar concept exists: the hard shutdown.

This abrupt termination can cause problems like data loss or system instability.

Thankfully, there's a better way: the graceful shutdown.

In a nutshell, a graceful shutdown is a polite way of stopping a program, giving it time to finish things up neatly.

A good graceful shutdown has the following characteristics:

complete ongoing requests (tasks)

release critical resources

potentially save state information to a disk or a database (so you can resume later)

stop accepting connections

So let's dive into the world of graceful shutdowns, specifically for Go applications running on Kubernetes. We will focus on HTTP servers, but the main ideas apply to all types of applications, including those not running on Kubernetes.

Signals in Unix Systems

One of the key tools for achieving graceful shutdown in Unix-based systems is the concept of signals, which are software interrupts sent to a program to indicate that an important event has occurred.

These signals can be sent by the user (Ctrl+C / Ctrl+\), by another program or process, or by the system itself (the kernel / OS). For example, SIGSEGV, a.k.a. "segmentation fault", is sent by the OS.

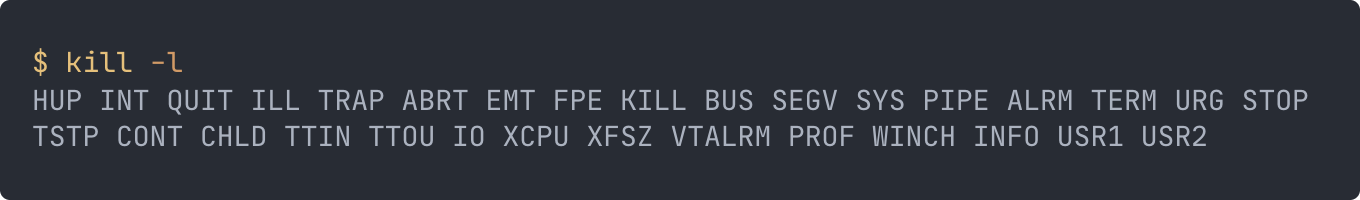

There are many signals, and you can find them here, but we are only concerned with the shutdown signals:

SIGTERM - sent to a process to request its termination. It is the most commonly used one, and we’ll focus on it later.

SIGKILL - “quit immediately”; it cannot be caught, blocked, or ignored.

SIGINT - interrupt signal (such as Ctrl+C)

SIGQUIT - quit signal (such as Ctrl+\)

Default behaviour in Go

So what happens when we start a long-running Go program in the terminal and then press Ctrl+C? By default, a SIGHUP, SIGINT, or SIGTERM signal causes the program to exit, unless you catch the signal.

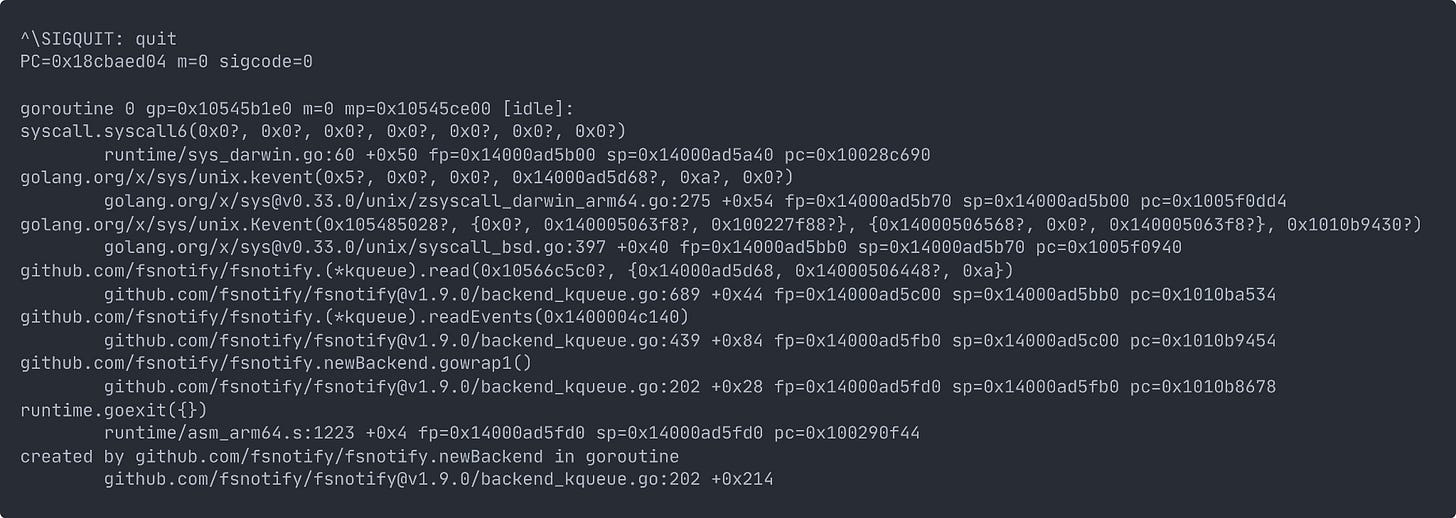

Also, when the Go runtime receives SIGQUIT (Ctrl+\), it prints a stack trace to the terminal before exiting the process. This can be helpful for debugging a hanging or unresponsive program.

This behaviour can be controlled with the GOTRACEBACK environment variable.

Kubernetes Pod Shutdown Process

So why did we talk about signals? Because Kubernetes also uses them to shut down pods.

Before getting to the Go application part, let's quickly review what happens behind the scenes when a Kubernetes pod shuts down.

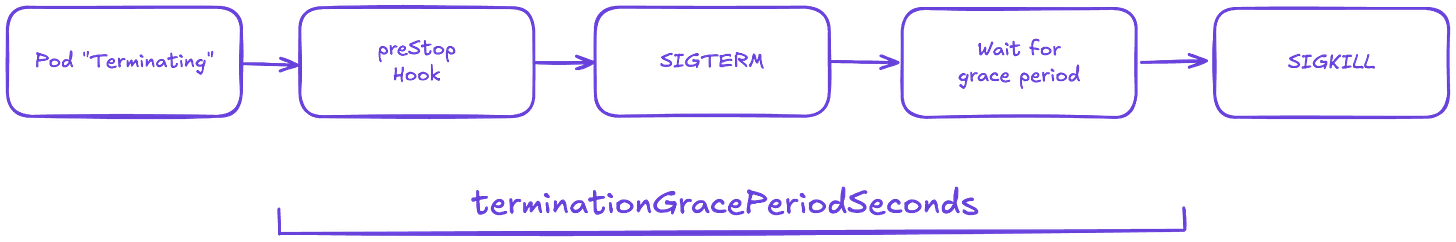

Pod termination follows a well-defined lifecycle.

Kubernetes gives pods time to finish serving in-progress requests and shut down cleanly before removing them.

Here is the diagram:

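Pod is set to the “Terminating” state and removed from the endpoints list of all Services. From this point on, the pod should stop getting new traffic, although the removal propagates asynchronously and can lag slightly behind the following steps. Containers running in the pod are not affected yet.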

preStop hook is executed, if defined. The preStop hook is a special command or HTTP request that is sent to the containers in the pod. It is useful if you are using third-party code or managing a system you don’t have control over, and it is a great way to trigger a graceful shutdown without modifying the application.

SIGTERM signal is sent to process 1 inside each container. Your code should listen for this event and start shutting down cleanly at this point. This may include stopping any long-lived connections (like a database connection or WebSocket stream), saving the current state, or anything like that. Even if you are using the preStop hook, it is important that you test what happens to your application if you send it a SIGTERM signal, so you are not surprised in production!

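At this point, Kubernetes waits for a specified time called the termination grace period, which is 30 seconds by default. Note that the preStop hook must complete its execution before the TERM signal can be sent, and that the grace period countdown already starts before the preStop hook runs, so the hook's running time counts against it.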

When the grace period expires, if there is still any container running in the Pod, the kubelet triggers forcible shutdown. The container runtime sends SIGKILL to any processes still running in any container in the Pod. At this point, all Kubernetes objects are cleaned up as well.

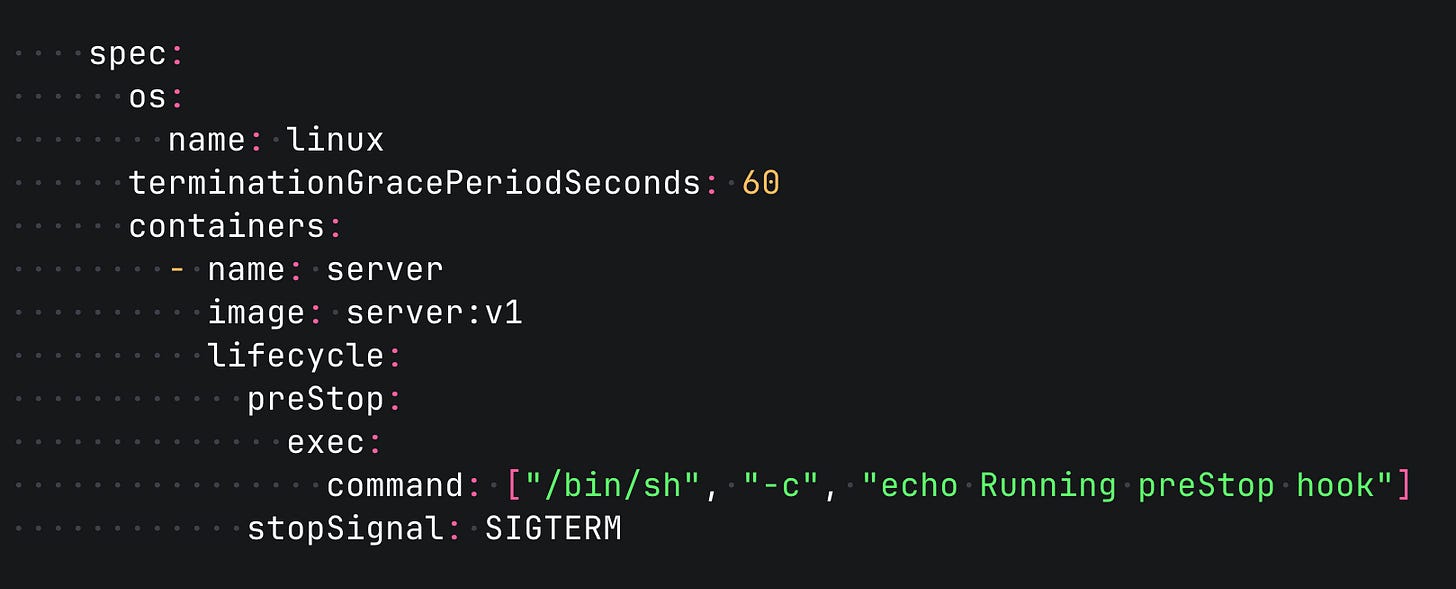

Here is what your container lifecycle may look like with a preStop hook. Note that you can actually configure a different stop signal instead of SIGTERM.

It is good practice to give the pod a bit more time than your shutdown actually needs, as a safety margin. You can do that by setting the terminationGracePeriodSeconds option.

Go Application

Now, armed with the basics of signals and the Kubernetes pod lifecycle, let's design the ultimate (probably) shutdown flow for a Go HTTP service.

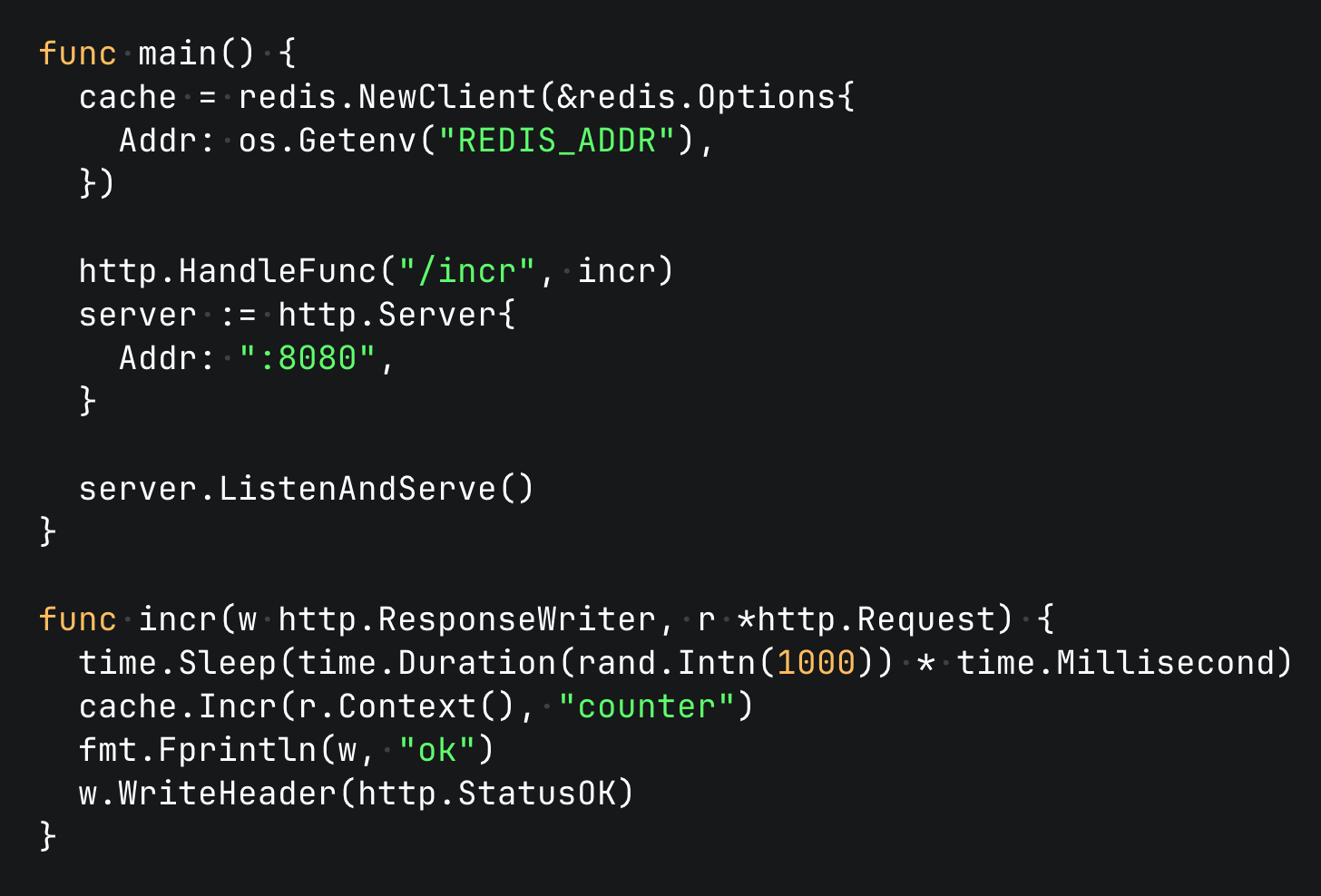

Our base HTTP server

To explore the world of graceful shutdowns in a practical setting, let's create a simple service we can experiment with. This "guinea pig" service will have a single endpoint that simulates some real-world work (we’ll add a slight delay) by calling Redis's INCR command. We'll also provide a basic Kubernetes configuration to test how the platform handles termination signals.

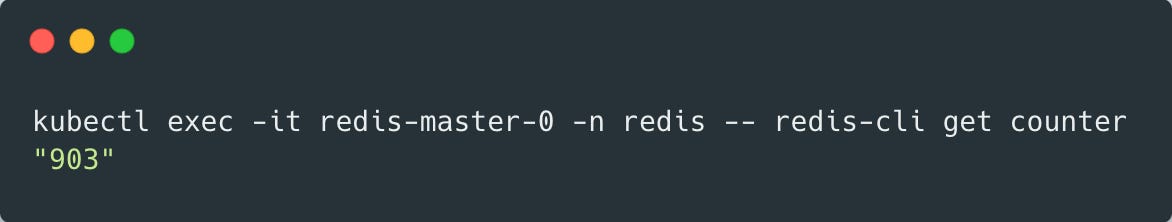

The ultimate goal: ensure our service gracefully handles shutdowns without losing any requests/data. By comparing the number of requests sent in parallel with the final counter value in Redis, we'll be able to verify if our graceful shutdown implementation is successful.

We won’t go into the details of setting up the Kubernetes cluster and Redis, but you can find the full setup in this GitHub repository.

The verification process is as follows:

Deploy Redis and Go application to Kubernetes.

Use vegeta to send 1000 requests (25/s over 40 seconds).

While vegeta is running, trigger a Kubernetes rolling update by updating the image tag.

Connect to Redis and check the “counter”; it should be 1000.
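If you cannot use vegeta, a rough stand-in takes only a few lines of Go. The sketch below is hypothetical and not part of the original setup. The function name `generateLoad`, the placeholder URL, and the rule that a 2xx response counts as a success are all assumptions. It sends requests at a fixed rate without waiting for earlier responses, then counts successes and failures, which you can compare with the Redis counter afterwards.

```go
package main

import (
	"fmt"
	"net/http"
	"sync"
	"sync/atomic"
	"time"
)

// loadResult summarises one run of the (hypothetical) load generator.
type loadResult struct {
	Sent, Succeeded, Failed int64
}

// generateLoad fires total GET requests at url, paced at rate requests per
// second, without waiting for earlier responses, and counts 2xx responses as
// successes. It is a rough stand-in for vegeta, not a replacement for it.
func generateLoad(url string, rate, total int) loadResult {
	if rate <= 0 || total <= 0 {
		return loadResult{}
	}
	var sent, ok, failed atomic.Int64
	var wg sync.WaitGroup
	client := &http.Client{Timeout: 10 * time.Second}
	ticker := time.NewTicker(time.Second / time.Duration(rate))
	defer ticker.Stop()

	for i := 0; i < total; i++ {
		<-ticker.C // pace requests evenly over time
		sent.Add(1)
		wg.Add(1)
		go func() {
			defer wg.Done()
			resp, err := client.Get(url)
			if err != nil {
				failed.Add(1) // e.g. "connection reset" or "connection refused"
				return
			}
			resp.Body.Close()
			if resp.StatusCode >= 200 && resp.StatusCode < 300 {
				ok.Add(1)
			} else {
				failed.Add(1)
			}
		}()
	}
	wg.Wait()
	return loadResult{Sent: sent.Load(), Succeeded: ok.Load(), Failed: failed.Load()}
}

func main() {
	// 25 requests/s over 40 s = 1000 requests. The URL is a placeholder.
	res := generateLoad("http://localhost:8080/", 25, 1000)
	fmt.Printf("sent=%d ok=%d failed=%d\n", res.Sent, res.Succeeded, res.Failed)
}
```

Firing each request in its own goroutine keeps the arrival rate constant even when the server slows down during the rollout. That behaviour is what exposes dropped requests.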

Let’s start with our base Go HTTP Server.
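The original server code is not reproduced in this text. Here is a minimal sketch of it. It assumes an in-memory `atomic.Int64` in place of the Redis `INCR` call and a hypothetical 200 ms delay for the simulated work. The important part is what is missing: there is no signal handling at all.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"sync/atomic"
	"time"
)

// counter stands in for the Redis key that the real service increments with
// INCR; an in-memory counter keeps this sketch self-contained.
var counter atomic.Int64

// workDelay simulates "real-world work" before the increment (hypothetical value).
var workDelay = 200 * time.Millisecond

// incrementCounter does the simulated work and returns the new counter value.
func incrementCounter() int64 {
	time.Sleep(workDelay)
	return counter.Add(1)
}

func incrHandler(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintf(w, "counter=%d\n", incrementCounter())
}

func main() {
	mux := http.NewServeMux()
	mux.HandleFunc("/", incrHandler)

	// No signal handling: on SIGTERM the program exits immediately, dropping
	// any request that is still inside incrementCounter.
	log.Fatal(http.ListenAndServe(":8080", mux))
}
```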

When we run our verification procedure against this code, we’ll see that some requests fail and the counter ends up below 1000 (the exact number varies from run to run).

This clearly means that we lost some data during the rolling update. 😢

It also has other potential downsides at scale:

Potential “connection reset” errors during the deployment

Unclosed resources (the Redis connection)

Missing or dirty data (especially if you don’t use transactions), such as the Redis counter in our case

Catching a Signal

So how can we know that our program needs to shut down?

We could use a preStop hook, but since we control the application, it's better to subscribe to a signal.

Go provides the os/signal package, which allows you to handle Unix signals. As mentioned earlier, by default the SIGINT and SIGTERM signals cause a Go program to exit. To keep our Go application from exiting so abruptly, we need to handle incoming signals.

There are two ways to do this.

Using a channel:

c := make(chan os.Signal, 1)

signal.Notify(c, syscall.SIGTERM)

Using a context (the preferred approach nowadays):

ctx, stop := signal.NotifyContext(context.Background(), syscall.SIGTERM)

defer stop()

NotifyContext returns a copy of the parent context that is marked done (its Done channel is closed) when one of the listed signals arrives, when the returned stop() function is called, or when the parent context's Done channel is closed, whichever happens first.

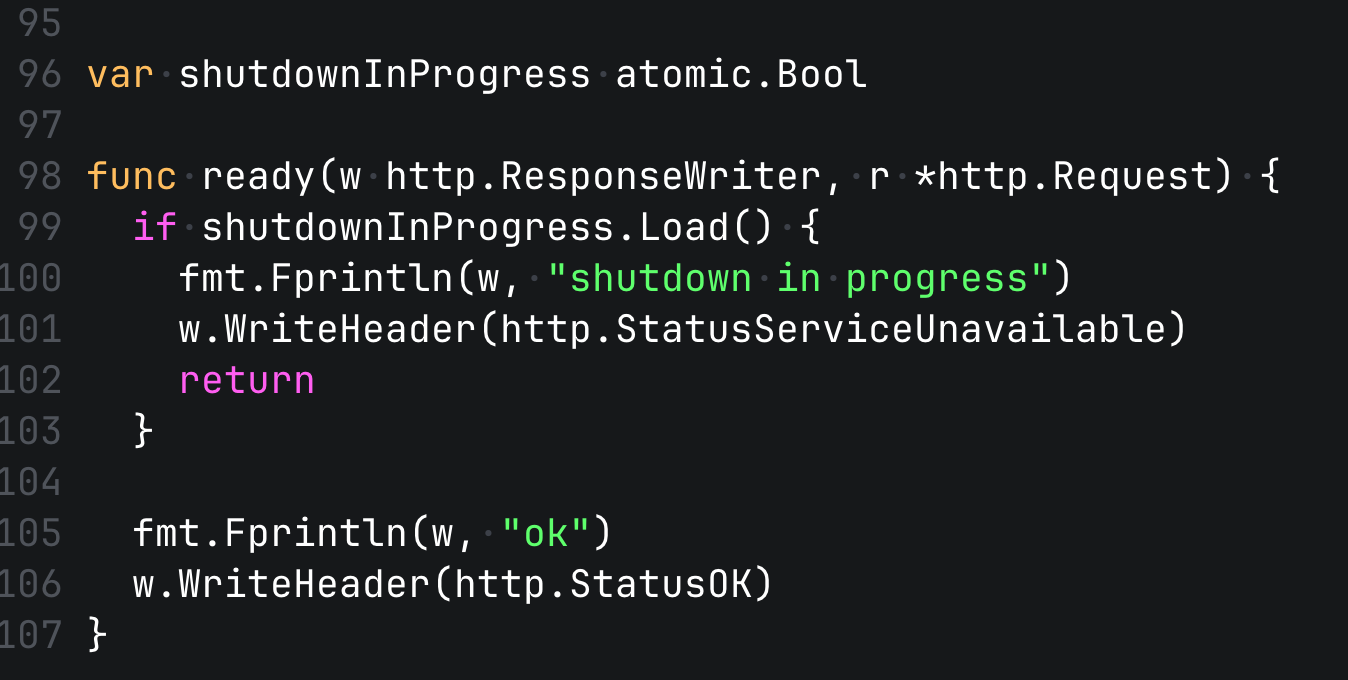

Readiness Probe

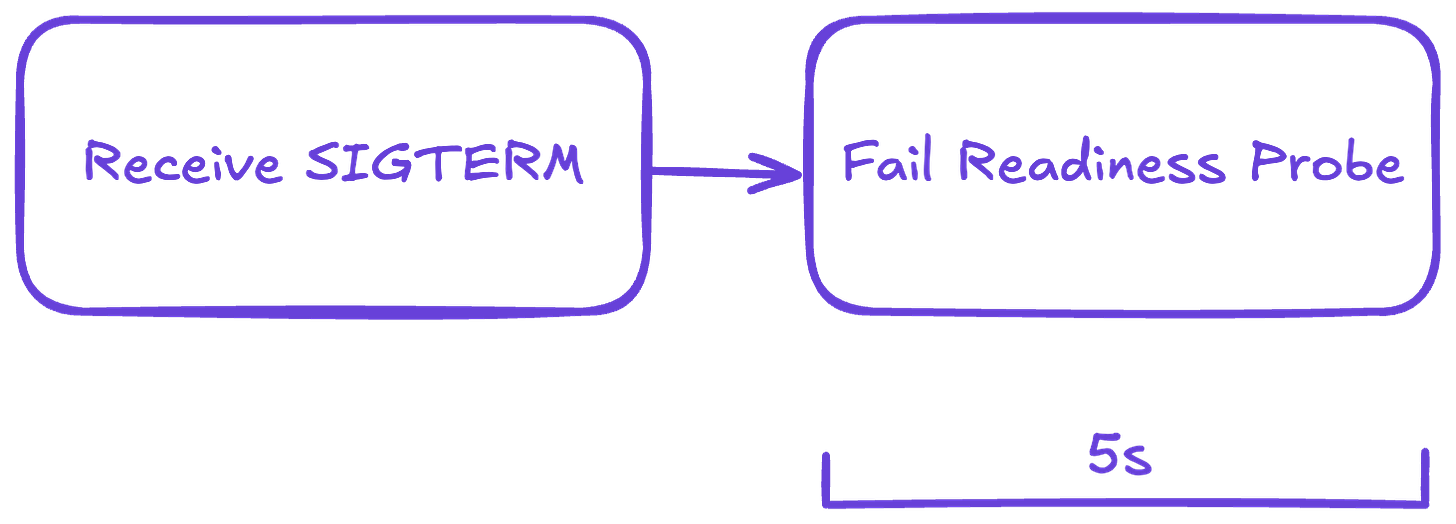

You might assume that once we receive a SIGTERM from Kubernetes, the container no longer receives any traffic. However, even after a pod is marked for termination, it might still receive traffic for a few moments, for example from external load balancers, which may rely on the readiness probe.

To avoid connection errors during this short window, the correct strategy is to fail the readiness probe first. This tells the orchestrator that your pod should no longer receive traffic.

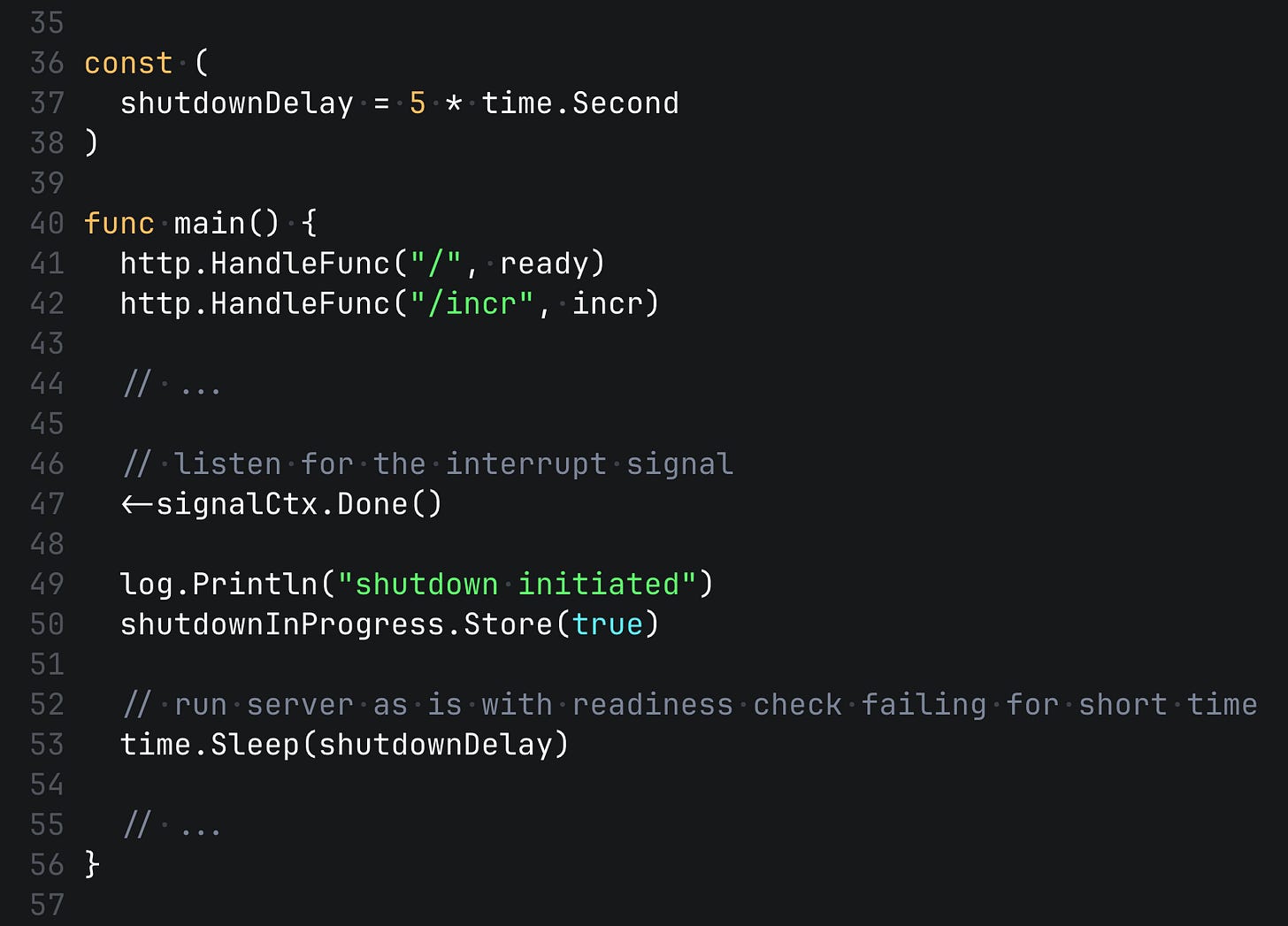

We can use an atomic variable here to switch the readiness status when we receive the SIGTERM.

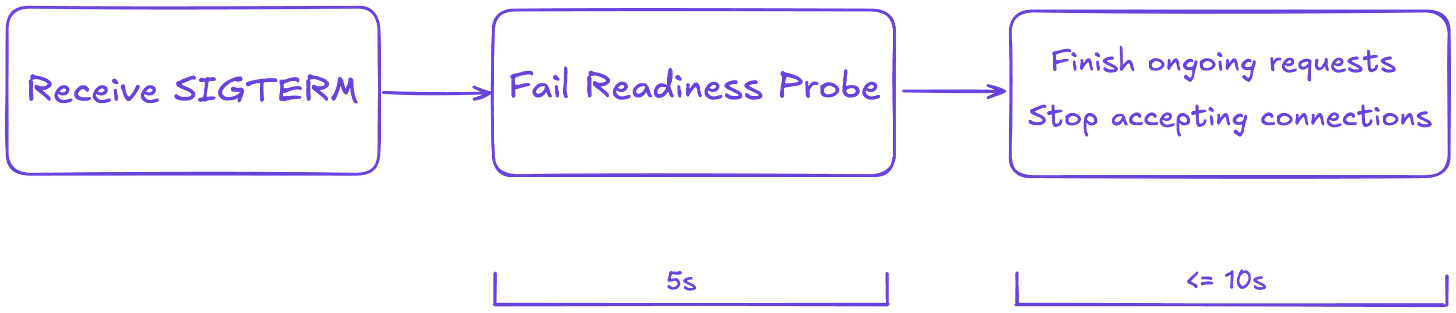

So first, we keep the server running as is, with only the readiness check failing for a short time, let's say 5 seconds (ideally configurable).

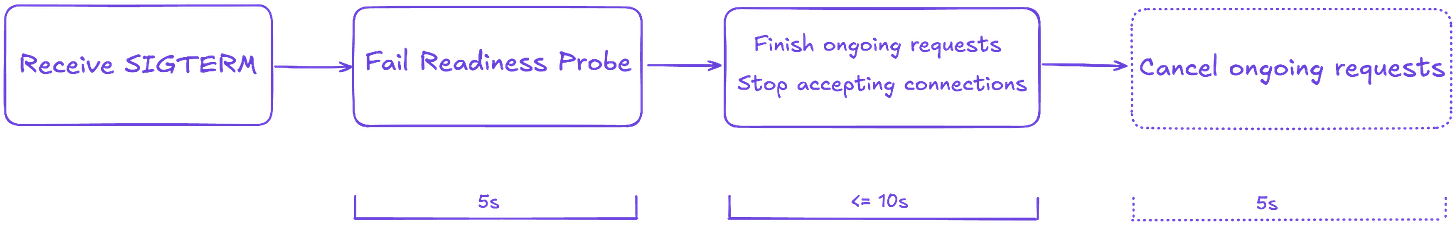

Here is the diagram of the process so far.

Server Shutdown

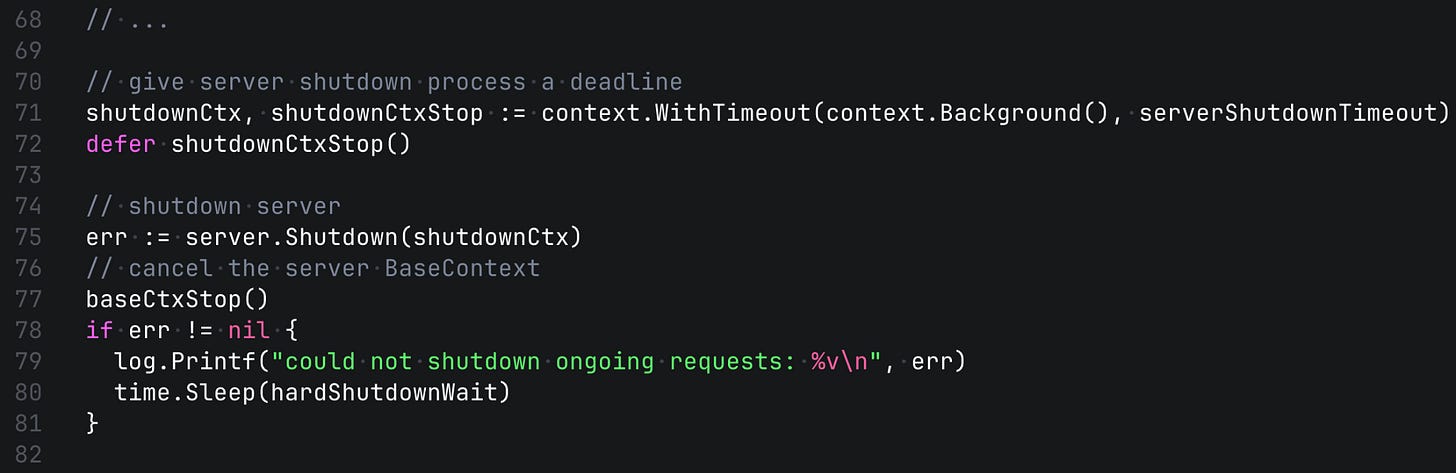

OK, now we can assume that there are no more incoming connections. The next logical step is to stop accepting new requests and finish the pending ones.

We can do this using the Shutdown(ctx) method of http.Server. And since we want to control how long the shutdown takes, we can also set a deadline for this step, let's say 10 seconds.

As you can see, we also extend the shutdown process in case of an error. An error means that not all pending requests were able to finish within 10 seconds, so it's time to cancel them by sending the cancellation. Again, we give this sub-step a defined amount of time (5s).

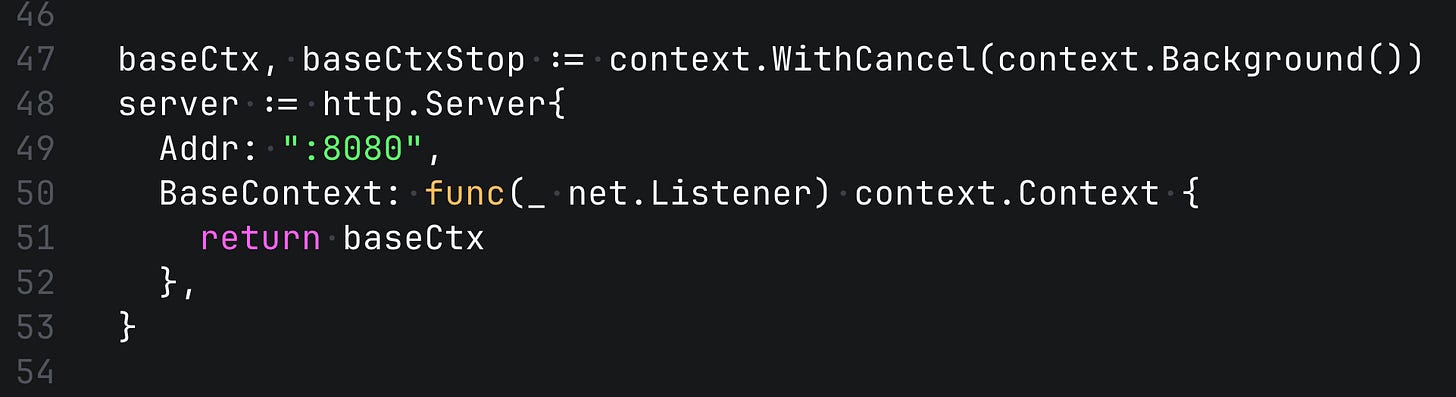

BaseContext

http.Server has a BaseContext field: a function that returns the base context for incoming requests. This context is shared across all incoming requests, and it can be handy during the shutdown process, for example to send the cancellation if there are still any running requests.

It's worth mentioning that if you want to make the shutdown process really smooth, all your functions should respect context cancellation and ideally not use context.Background() / context.TODO(), because work started with context.Background() is cut off from the request's cancellation signal.

Then the functions down the line can also do a proper cleanup, such as rolling back a transaction.

Release Resources

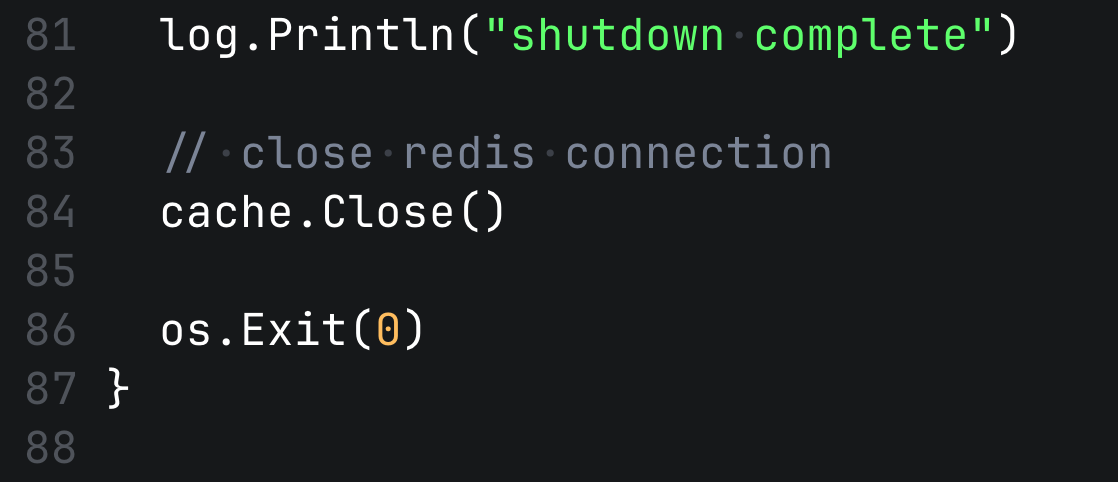

As the last step, we release critical resources: connections to databases, caches, and so on, and we close file descriptors. You should delay resource cleanup until the shutdown timeout has passed or all requests are done. It's also good practice to specify the exit code explicitly.

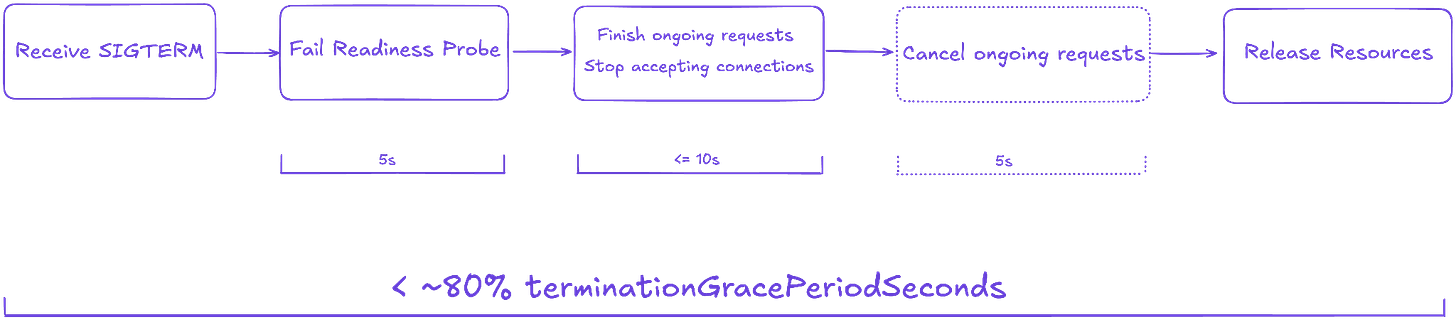

Finally, here is the full diagram. The shutdown doesn't have to take a lot of time, and you can balance the timeouts based on your service's needs.

Although we focused on HTTP servers, the main ideas apply to all types of applications, including those not running on Kubernetes, because they are based on fundamental principles such as UNIX signals.

Conclusion

For those interested in testing this out, I prepared a full project with Kubernetes manifests and some load testing. It clearly shows that the server without a graceful shutdown loses a portion of requests during the rolling update, while the server with one doesn't.

You can find the Go code and Kubernetes manifests in this GitHub repository.