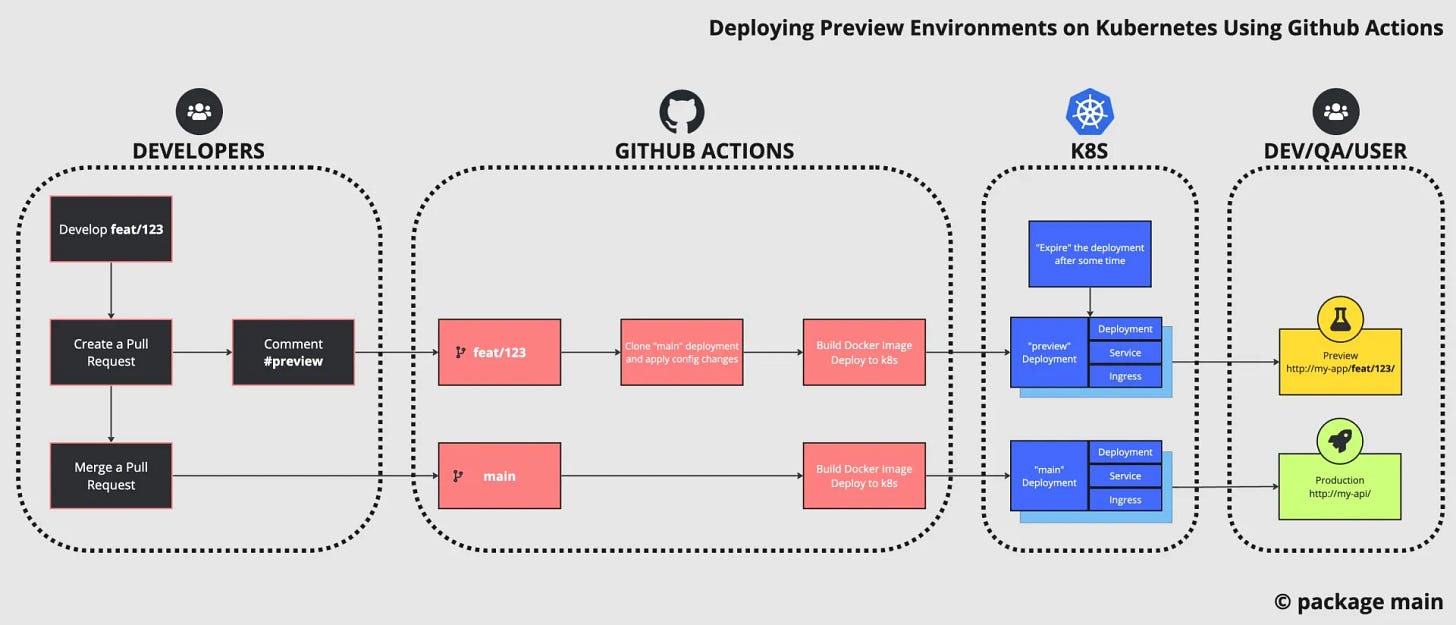

How to Deploy Preview Environments on Kubernetes with GitHub Actions

Testing deployed code before it's merged in total isolation from your real traffic.

Introduction

Preview environments

Preview environments are a valuable tool in modern software development: each pull request gets its own deployment that can be tried out before anything is merged.

They provide a safe and isolated space where developers can test and debug code changes before they are merged into the main branch.

They enable faster feedback loops and more efficient collaboration: designers, product managers and QA testers can try new features and provide feedback early.

Moreover, preview environments are ephemeral, meaning they are created and destroyed as needed, which helps in reducing costs and resource wastage.

They integrate seamlessly with CI/CD pipelines, enabling continuous integration and deployment, and support performance testing to ensure the application can handle expected loads.

Overall, preview environments streamline the development process, enhance productivity, and ultimately lead to faster, more reliable software releases.

Article overview

In this article, we will cover how to set up Preview Environments for Backend services and APIs.

Kubernetes, Docker and GitHub Actions are the central pieces of this article, though any CI/CD system should work as long as it can run shell commands.

This setup is completely language agnostic since it’s all about Kubernetes configuration.

However, we won’t cover how to set up an entire Kubernetes cluster with Terraform, Helm or whatnot. The prerequisite is that you have at least one environment deployed and working with Kubernetes Deployments.

The Frontend case

We won’t cover Frontend projects because a lot of Frontend Clouds (Vercel, Firebase, etc.) provide Previews as part of their offering.

Concepts

Kubernetes

Your API deployed in Kubernetes is usually represented by different resources that reference each other, namely: Deployment, Ingress and Service.

There are others like Secrets, HorizontalPodAutoscaler etc. but we won’t need to alter any of them for our Preview Environments.

Let’s break it down and understand what they represent:

Deployment:

Environment variables

CPU / RAM configuration

Docker image and version

Ingress (optional):

Rules on how to access your service

It’s optional because some services, like gRPC ones, should probably stay internal to your cluster. They will still get an internal address thanks to the Service described below.

Service:

A method for exposing a network application that is running as one or more Pods in your cluster.

See this as an internal address to contact the different pods that compose your deployment.

These are simply YAML configurations that can be copied and edited. This is the essence of our technique to build preview environments on the fly.

Docker

Kubernetes Pods run Docker images, so Kubernetes doesn’t care which programming language you use or which dependencies need to be installed. It’s all handled inside your Docker image.

As you build different versions of your code, you will push different versions of your Docker image to your Registry. That’s wonderful because everything is versioned: we simply have to replace the version of the Docker image inside the Deployment configuration, which looks something like:

spec:
  containers:
    - name: my-api
      image: pltvs/substack-01-backend-previews:v1.0.0

v1.0.0 is semantic versioning, which is great for production releases. For Pull Requests deployed on staging, however, you could simply use the git short SHA: pltvs/substack-01-backend-previews:43121d4
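As a rough sketch of the tagging convention described above, the helpers below derive a 7-character short SHA and build the image reference. The function names `short_sha` and `image_ref` and the full SHA are made up for this illustration; only the repository name comes from this article.

```shell
#!/bin/sh
# Hypothetical helpers, not part of the workflows in this article.

# Print the first 7 characters of a full commit SHA.
short_sha() {
  printf '%s' "$1" | cut -c1-7
}

# Build "<repository>:<short sha>" from a repository name and a full SHA.
image_ref() {
  printf '%s:%s\n' "$1" "$(short_sha "$2")"
}

# The full SHA below is an invented example value.
image_ref "pltvs/substack-01-backend-previews" "43121d4f0c9a8b7e6d5c4b3a2f1e0d9c8b7a6f5e"
# prints pltvs/substack-01-backend-previews:43121d4
```

Tagging by short SHA keeps every Pull Request build addressable without having to invent version numbers for work that is not released yet.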

GitHub Actions (or any CI/CD)

GitHub Actions provides workflows, which are useful to avoid repeating the same steps over and over.

Here is an example (pseudo code) of GitHub Actions you could have in your repository. It uses 3 workflows to:

Detect if the Pull Request got a #preview comment

This avoids building a Preview for every Pull Request (which you could still do if you need to).

The comment can also be used to change environment variables on the fly while deploying the Backend Previews.

Build the Docker image

It can be useful to tag the Docker image with the branch name in addition to the git short SHA.

Deploy the Kubernetes preview

jobs:
  comments:
    outputs:
      triggered: ${{ steps... }}
    steps:
      - uses: khan/pull-request-comment-trigger@v1.1.0
        with:
          trigger: '#preview'
  build:
    uses: myworkflows/docker-build-push.yml
    needs: [comments]
    if: needs.comments.outputs.triggered == 'true'
    with:
      repository: ${{ github.event.repository.name }}
  preview:
    uses: myworkflows/preview.yaml
    needs: [comments, build]
    with:
      target: my-api-staging
      docker-tag: ${{ needs.build.outputs.sha-short }}

Step-by-Step

GitHub Action

Let’s go through our main GitHub Action bit by bit. It does 3 things: check comments, build the Docker image, and deploy a Kubernetes preview.

.github/workflows/substack01.yaml

name: substack01-backend-previews

on:
  pull_request:
    types: [opened, synchronize]
  issue_comment:
    types: [created, edited]
  workflow_dispatch:

jobs:
  comments:
    runs-on: ubuntu-latest
    outputs:
      triggered: ${{ steps.check-preview.outputs.triggered }}
      config: ${{ steps.check-preview.outputs.comment_body }}
    steps:
      - uses: khan/pull-request-comment-trigger@v1.1.0
        id: check-preview
        with:
          trigger: '#preview'
        env:
          GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'

  build:
    # A reusable workflow in the same repository is referenced with a ./ prefix and no @ref
    uses: ./.github/workflows/substack01-build.yaml
    needs: [comments]
    if: needs.comments.outputs.triggered == 'true'
    secrets:
      gh-token: ${{ secrets.GITHUB_TOKEN }}
      dh-username: ${{ secrets.DOCKERHUB_USERNAME }}
      dh-token: ${{ secrets.DOCKERHUB_TOKEN }}
    with:
      repository: pltvs/substack-01-backend-previews

  preview:
    uses: ./.github/workflows/substack01-preview.yaml
    needs: [comments, build]
    if: needs.comments.outputs.triggered == 'true'
    secrets:
      k8s-sa: ${{ secrets.K8S_SERVICE_ACCOUNT }}
    with:
      target: my-api
      # git-sha is the output name declared by the build workflow
      docker-tag: ${{ needs.build.outputs.git-sha }}
      cluster: staging
      expiration: "3"
      comment: ${{ needs.comments.outputs.config }}

Nothing too complicated: this follows the pseudo code above.

For convenience, the reusable workflows are in the same repository. However, it would make a lot of sense to have a separate GitHub repository (even a private one) to share them among your different projects.

The following part is long and hard to digest, so make a coffee and get ready :)

.github/workflows/substack01-build.yaml

name: build

on:
  workflow_call:
    secrets:
      gh-token:
        required: true
      dh-username:
        required: true
      dh-token:
        required: true
    inputs:
      repository:
        required: true
        type: string
        description: Docker hub repository's name
      context:
        type: string
        description: relative folder to find Dockerfile
        default: .
    outputs:
      branch-name:
        value: ${{ jobs.docker-push.outputs.branch-name }}
        description: name of the branch
      git-sha:
        value: ${{ jobs.docker-push.outputs.git-sha }}
        description: short SHA of the commit

jobs:
  docker-push:
    runs-on: ubuntu-latest
    outputs:
      git-sha: ${{ steps.git-context.outputs.git-sha }}
      branch-name: ${{ steps.git-context.outputs.branch-name }}
    steps:
      - name: Retrieve git context metadata
        shell: bash
        id: git-context
        env:
          GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          # issue_comment runs report the default branch in GITHUB_REF, so they are excluded here
          if [[ "${{ github.event_name }}" != "issue_comment" && $GITHUB_REF = *refs/heads/* ]]; then
            echo "branch-name=$GITHUB_REF_NAME" >> $GITHUB_OUTPUT
            echo "git-sha=`echo $GITHUB_SHA | cut -c1-7`" >> $GITHUB_OUTPUT
          elif [[ "${{ github.event_name }}" == "issue_comment" ]]; then
            export RESPONSE=`curl -s --request GET --url "${{ github.event.issue.pull_request.url }}" --header "Authorization: Bearer ${{ env.GH_TOKEN }}"`
            echo "branch-name=`echo $RESPONSE | jq -r .head.ref`" >> $GITHUB_OUTPUT
            echo "git-sha=`echo $RESPONSE | jq -r .head.sha | cut -c1-7`" >> $GITHUB_OUTPUT
          else
            echo "branch-name=$GITHUB_HEAD_REF" >> $GITHUB_OUTPUT
            echo "git-sha=`echo ${{ github.event.pull_request.head.sha }} | cut -c1-7`" >> $GITHUB_OUTPUT
          fi
      - uses: actions/checkout@v4
        with:
          ref: ${{ steps.git-context.outputs.branch-name }}
      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.dh-username }}
          password: ${{ secrets.dh-token }}
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3
      - name: Build and Push image
        uses: docker/build-push-action@v5
        with:
          context: ${{ inputs.context }}
          file: ${{ inputs.context }}/Dockerfile
          push: true
          tags: |
            ${{ inputs.repository }}:${{ steps.git-context.outputs.git-sha }}
            ${{ inputs.repository }}:${{ steps.git-context.outputs.branch-name }}
          build-args: |
            GH_ACCESS_TOKEN=${{ secrets.gh-token }}
          secrets: |
            GIT_AUTH_TOKEN=${{ secrets.gh-token }}
        env:
          DOCKER_BUILDKIT: 1

This is pretty standard, except for the step that retrieves the “git context metadata”. It is necessary because, depending on what triggered the workflow, the short SHA and branch name are accessed in different ways.

Reference on GitHub Actions variables

Let’s explain this a little bit:

if [[ "${{ github.event_name }}" != "issue_comment" && $GITHUB_REF = *refs/heads/* ]]; then
  echo "branch-name=$GITHUB_REF_NAME" >> $GITHUB_OUTPUT
  echo "git-sha=`echo $GITHUB_SHA | cut -c1-7`" >> $GITHUB_OUTPUT

This condition is not actually useful in this article, because we restrict our GitHub Action to Pull Requests and comments. But this reusable workflow could also be used in a Push context, and this branch is what makes it generic.

Essentially, it checks if we are in the context of a Push. Comment events are excluded explicitly because, for them, $GITHUB_REF points to the default branch and would otherwise match this condition.

In the case of a Pull Request, $GITHUB_REF provides the “merge branch”, which is not the one we want:

$GITHUB_REF

The fully-formed ref of the branch or tag that triggered the workflow run.

For workflows triggered by push, this is the branch or tag ref that was pushed.

For workflows triggered by pull_request, this is the pull request merge branch.

elif [[ "${{ github.event_name }}" == "issue_comment" ]]; then
  export RESPONSE=`curl -s --request GET --url "${{ github.event.issue.pull_request.url }}" --header "Authorization: Bearer ${{ env.GH_TOKEN }}"`
  echo "branch-name=`echo $RESPONSE | jq -r .head.ref`" >> $GITHUB_OUTPUT
  echo "git-sha=`echo $RESPONSE | jq -r .head.sha | cut -c1-7`" >> $GITHUB_OUTPUT

If the trigger is a comment on our Pull Request, the event payload unfortunately doesn’t include the branch or commit of this Pull Request. So the only way to get them is to cURL the Pull Request URL and retrieve the PR metadata.

The script extracts the short SHA and the branch name. The branch name is used to tag Docker images with something more readable than a random string of 7 characters, which can be useful for debugging or for finding the latest version of your Preview Docker image.

else
  echo "branch-name=$GITHUB_HEAD_REF" >> $GITHUB_OUTPUT
  echo "git-sha=`echo ${{ github.event.pull_request.head.sha }} | cut -c1-7`" >> $GITHUB_OUTPUT

In this case the event is a Pull Request, so we can get the HEAD branch directly from $GITHUB_HEAD_REF and the short SHA from the event.

$GITHUB_HEAD_REF

The head ref or source branch of the pull request in a workflow run. This property is only set when the event that triggers a workflow run is either pull_request or pull_request_target. For example, feature-branch-1.

.github/workflows/substack01-preview.yaml

Part 1:

name: kubernetes-preview

on:
  workflow_call:
    secrets:
      k8s-sa:
        required: true
        description: GCP Service Account with kubernetes permissions
    inputs:
      target:
        required: true
        type: string
        description: K8s deployment name that will be used to copy configuration from
      docker-tag:
        required: true
        type: string
        description: Docker tag to deploy
      cluster:
        required: true
        type: string
        description: Cluster name
      zone:
        type: string
        description: Cluster zone
        default: us-west1-c
      expiration:
        type: string
        description: Number of days after which the preview expires and is removed
        default: "5"
      comment:
        type: string
        description: environment variables to change
        default: ""
      namespace:
        type: string
        description: namespace of your deployment
        default: default

We could add some outputs, to be able to chain this workflow to another one, but we want to keep it simple because it’s already big by itself.

Part 2:

jobs:
  preview-deployment:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - id: gcp-auth
        uses: google-github-actions/auth@v2
        with:
          # must match the secret name declared in Part 1 (k8s-sa)
          credentials_json: ${{ secrets.k8s-sa }}
      - name: Set up Cloud SDK
        uses: google-github-actions/setup-gcloud@v2
        with:
          install_components: 'gke-gcloud-auth-plugin'
      - name: "Connect to cluster"
        uses: google-github-actions/get-gke-credentials@v2
        with:
          cluster_name: ${{ inputs.cluster }}
          location: ${{ inputs.zone }}
          # gcp-project-id is not declared in Part 1: add it to the workflow_call secrets and pass it from the caller
          project_id: ${{ secrets.gcp-project-id }}

This second part is mostly setup: it authenticates with GCP, sets up the Cloud SDK and connects to the right cluster.

Part 3:

      - name: Get PR number
        id: pr
        env:
          GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          if [[ "${{ github.event_name }}" == "issue_comment" ]]; then
            export JSON_RESP=`curl -s --request GET --url "${{ github.event.issue.pull_request.url }}" --header "Authorization: Bearer ${{ env.GH_TOKEN }}"`
            echo "pr-number=`echo $JSON_RESP | jq -r .number`" >> $GITHUB_OUTPUT
          else
            # pull_request events expose the number under github.event.pull_request
            echo "pr-number=${{ github.event.pull_request.number }}" >> $GITHUB_OUTPUT
          fi
      - name: Get deployment-name
        id: deployment-name
        run: |
          echo "name=${{ inputs.target }}-${{ steps.pr.outputs.pr-number }}" >> $GITHUB_OUTPUT
      - name: Get expiration timestamp
        id: expiration-ts
        run: |
          expiration=${{ inputs.expiration }}
          if [[ ${{ inputs.expiration }} -gt 8 ]] || [[ ${{ inputs.expiration }} -lt 1 ]]; then
            expiration=5
          fi
          t=`date -d "+$expiration day" +"%s"`
          echo "timestamp=$t" >> $GITHUB_OUTPUT
          echo "date-end=`date -ud @$t`" >> $GITHUB_OUTPUT

There is some pre-work to do in order to gather some information, namely:

PR number

To create a new Deployment, it needs a unique name. We use the PR number mostly for uniqueness.

Again, we have to distinguish between the event being a Pull Request or a comment.

Define the unique Deployment name ($target-$pr_number)

$target is an input that tells which Deployment in Kubernetes we want to clone. In our case it would be my-api.

Expiration time

For the Preview to be ephemeral, it needs a way to tell us when it expires. This value will be set as a Kubernetes label in the metadata of our Deployment.
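The pre-work above can be sketched as a few standalone shell helpers. This is only an illustration: the function names are hypothetical, and the expiration is computed as a fixed 86400 seconds per day, which is an assumption that differs slightly from the `date -d "+N day"` call used in the workflow.

```shell
#!/bin/sh
# Hypothetical helpers mirroring the pre-work steps; names are illustrative.

# Unique preview name: "<target>-<pr number>".
preview_name() {
  printf '%s-%s\n' "$1" "$2"
}

# Keep the expiration (in days) within 1..8 and fall back to 5 otherwise,
# the same bounds as the workflow step.
clamp_expiration() {
  case "$1" in
    ''|*[!0-9]*|0*) echo 5; return ;;   # empty, non-numeric, zero or zero-padded
  esac
  if [ "$1" -gt 8 ] || [ "$1" -lt 1 ]; then
    echo 5
  else
    echo "$1"
  fi
}

# Expiration as a Unix timestamp: now ($1) + days ($2) * 86400 seconds.
expiration_ts() (
  now=$1
  days=$(clamp_expiration "$2")
  echo $(( now + days * 86400 ))
)

preview_name my-api 42
# prints my-api-42
expiration_ts "$(date +%s)" 3
```

Passing the current time as an argument keeps `expiration_ts` easy to check by hand: with a start of 1000 and 3 days it yields 260200.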

Part 4:

      - name: Create Deployment
        id: kubernetes-deployment
        run: |
          kubectl delete deployment ${{ steps.deployment-name.outputs.name }} || true
          kubectl get deployment ${{ inputs.target }} -o yaml > /tmp/deployment.yaml
          yq 'del(.spec.template.spec.initContainers)' -i /tmp/deployment.yaml
          yq '(..|select(has("app.kubernetes.io/name")).["app.kubernetes.io/name"]) |= "${{ steps.deployment-name.outputs.name }}"' -i /tmp/deployment.yaml
          yq '.metadata.name="${{ steps.deployment-name.outputs.name }}"' -i /tmp/deployment.yaml
          yq '.spec.template.spec.containers.[].name += "-${{ steps.pr.outputs.pr-number }}"' -i /tmp/deployment.yaml
          yq '.metadata.labels.preview="true"' -i /tmp/deployment.yaml
          yq '.metadata.labels.expiration="${{ steps.expiration-ts.outputs.timestamp }}"' -i /tmp/deployment.yaml
          yq '.metadata.labels.pr-number="${{ steps.pr.outputs.pr-number }}"' -i /tmp/deployment.yaml
          # Match the whole tag, including dots and dashes (e.g. v1.0.0)
          yq '(..|select(has("image")).["image"]) |= sub(":[^:/]+$", ":${{ inputs.docker-tag }}")' -i /tmp/deployment.yaml
          VARS=`echo "${{ inputs.comment }}" | sed "s/#preview//" | sed '/^[[:blank:]]*$/ d'`
          echo $VARS
          while IFS= read -r line; do
            NAME=`echo $line | cut -d "=" -s -f 1 | tr -d '\r'`
            # -f 2- keeps values that themselves contain "="
            VALUE=`echo $line | cut -d "=" -s -f 2- | tr -d '\r'`
            if [[ -z "$NAME" ]] || [[ -z "$VALUE" ]]; then
              continue
            fi
            yq ".spec.template.spec.containers.[0].env |= map(select(.name == \"$NAME\") |= del(.valueFrom))" -i /tmp/deployment.yaml
            yq ".spec.template.spec.containers.[0].env |= map(select(.name == \"$NAME\").value=\"$VALUE\")" -i /tmp/deployment.yaml
          done <<< "$VARS"
          cat /tmp/deployment.yaml
          kubectl apply -f /tmp/deployment.yaml

Here, there is a lot to unpack.

First, if there is an existing deployment with the same name, we delete it to avoid conflicts. If there is none, we don’t want the step to fail, hence the || true.

kubectl delete deployment ${{ steps.deployment-name.outputs.name }} || true

The next step is to extract the Target deployment’s YAML configuration and write it to the file system for future use:

kubectl get deployment ${{ inputs.target }} -o yaml > /tmp/deployment.yaml

Then, we modify some of the lines of this configuration.

For example, you can remove the initContainers to avoid side effects linked to Database migrations. In some cases you might want to keep them; it all depends on what these initContainers do.

It replaces the name of the deployment with the new unique name that contains the PR number.

The pre-work we did before, gathering some data, is now useful for adding some metadata labels.

And finally, the most important part: it replaces the Docker image tag with the version we just built in the previous reusable workflow.

yq 'del(.spec.template.spec.initContainers)' -i /tmp/deployment.yaml
yq '(..|select(has("app.kubernetes.io/name")).["app.kubernetes.io/name"]) |= "${{ steps.deployment-name.outputs.name }}"' -i /tmp/deployment.yaml
yq '.metadata.name="${{ steps.deployment-name.outputs.name }}"' -i /tmp/deployment.yaml
yq '.spec.template.spec.containers.[].name += "-${{ steps.pr.outputs.pr-number }}"' -i /tmp/deployment.yaml
yq '.metadata.labels.preview="true"' -i /tmp/deployment.yaml
yq '.metadata.labels.expiration="${{ steps.expiration-ts.outputs.timestamp }}"' -i /tmp/deployment.yaml
yq '.metadata.labels.pr-number="${{ steps.pr.outputs.pr-number }}"' -i /tmp/deployment.yaml
# Match the whole tag, including dots and dashes (e.g. v1.0.0)
yq '(..|select(has("image")).["image"]) |= sub(":[^:/]+$", ":${{ inputs.docker-tag }}")' -i /tmp/deployment.yaml

The next part is all about environment variables. It’s highly likely you will want to change some env vars for your Preview. It can also be useful in case you deploy different services / APIs and want to connect them to each other.

We will see later how to get the internal and external addresses of our Previews.

VARS=`echo "${{ inputs.comment }}" | sed "s/#preview//" | sed '/^[[:blank:]]*$/ d'`
echo $VARS
while IFS= read -r line; do
  NAME=`echo $line | cut -d "=" -s -f 1 | tr -d '\r'`
  # -f 2- keeps values that themselves contain "="
  VALUE=`echo $line | cut -d "=" -s -f 2- | tr -d '\r'`
  if [[ -z "$NAME" ]] || [[ -z "$VALUE" ]]; then
    continue
  fi
  yq ".spec.template.spec.containers.[0].env |= map(select(.name == \"$NAME\") |= del(.valueFrom))" -i /tmp/deployment.yaml
  yq ".spec.template.spec.containers.[0].env |= map(select(.name == \"$NAME\").value=\"$VALUE\")" -i /tmp/deployment.yaml
done <<< "$VARS"

You will notice a limitation when an environment variable comes from a secret and is therefore defined like this:

- name: MONGO_ADDR
  valueFrom:
    secretKeyRef:
      name: {{ .Values.secretName }}
      key: mongoDSN

For now, the script removes the valueFrom: entirely and replaces it with a plain value:. This part could be improved.
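To make the parsing step easier to reason about in isolation, here is a minimal sketch of it as a standalone function. The name `parse_preview_comment` is hypothetical, and the sketch only covers turning a comment body into NAME=VALUE lines; it splits on the first = only, so that values may themselves contain = characters.

```shell
#!/bin/sh
# Hypothetical helper: turn a PR comment body ($1) into NAME=VALUE lines.
parse_preview_comment() {
  printf '%s\n' "$1" | tr -d '\r' |
  sed -e 's/#preview//' -e 's/^[[:blank:]]*//' -e 's/[[:blank:]]*$//' |
  while IFS= read -r line; do
    case "$line" in
      *=*) ;;            # keep only lines that contain "="
      *) continue ;;
    esac
    name=${line%%=*}     # text before the first "="
    value=${line#*=}     # text after the first "=", may itself contain "="
    if [ -z "$name" ] || [ -z "$value" ]; then
      continue
    fi
    printf '%s=%s\n' "$name" "$value"
  done
}

# Example values below are made up for illustration.
parse_preview_comment "#preview
MONGO_ADDR=mongodb://db:27017/app?retryWrites=true
LOG_LEVEL=debug"
# prints:
# MONGO_ADDR=mongodb://db:27017/app?retryWrites=true
# LOG_LEVEL=debug
```

Lines without an = sign, or with an empty name or value, are skipped, which matches the behaviour of the loop in the workflow.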

The comment in your PR should follow this format to work with the shell script:

#preview
ENV1=value1
ENV2=value2

The last part is easy: it prints the modified YAML file stored on the file system for debugging purposes, then applies it.

cat /tmp/deployment.yaml
kubectl apply -f /tmp/deployment.yaml

Part 5:

We do the same for the Service, but this time we also extract a valuable piece of information: the internal address of our service.

      - name: Create service
        id: kubernetes-service
        run: |
          kubectl delete service ${{ steps.deployment-name.outputs.name }} || true
          kubectl get service ${{ inputs.target }} -o yaml > /tmp/service.yaml
          yq '.metadata.name="${{ steps.deployment-name.outputs.name }}"' -i /tmp/service.yaml
          yq '(..|select(has("app.kubernetes.io/name")).["app.kubernetes.io/name"]) |= "${{ steps.deployment-name.outputs.name }}"' -i /tmp/service.yaml
          yq '.metadata.labels.preview="true"' -i /tmp/service.yaml
          yq '.metadata.labels.expiration="${{ steps.expiration-ts.outputs.timestamp }}"' -i /tmp/service.yaml
          yq '.metadata.labels.pr-number="${{ steps.pr.outputs.pr-number }}"' -i /tmp/service.yaml
          PORT=`cat /tmp/service.yaml | yq '.spec.ports.[0].port'`
          echo "service-addr=${{ steps.deployment-name.outputs.name }}.${{ inputs.namespace }}:$PORT" >> $GITHUB_OUTPUT
          cat /tmp/service.yaml
          kubectl apply -f /tmp/service.yaml

To give some insight into what an internal service address looks like in Kubernetes and how it differs for a Preview:

Let’s say your project is in the Namespace “api” and you usually get this service address: my-api.api:8080

The preview would simply add the PR number to it: my-api-1.api:8080
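The address format can be written down as a one-line helper. The function name `preview_service_addr` is hypothetical; the format itself follows the `service-addr` output built by the workflow step above.

```shell
#!/bin/sh
# Hypothetical helper: internal address of a preview Service,
# "<target>-<pr number>.<namespace>:<port>".
preview_service_addr() {
  printf '%s-%s.%s:%s\n' "$1" "$2" "$3" "$4"
}

preview_service_addr my-api 1 api 8080
# prints my-api-1.api:8080
```

Another preview that needs to talk to this one could receive this address through an environment variable set in its own #preview comment.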

Part 6:

Again, an Ingress is not always present because not every service is accessible from the outside (think of a gRPC service, for example). That’s why this step uses continue-on-error: true.

Similar to the Service, there are important things to extract in order to know the external address of this project.

      - name: Create ingress
        id: kubernetes-ingress
        continue-on-error: true
        run: |
          kubectl delete ingress ${{ steps.deployment-name.outputs.name }}-ingress || true
          kubectl get ingress ${{ inputs.target }}-ingress -o yaml > /tmp/ingress.yaml
          yq '.metadata.name="${{ steps.deployment-name.outputs.name }}-ingress"' -i /tmp/ingress.yaml
          yq '.spec.rules[].http.paths.[0].backend.service.name="${{ steps.deployment-name.outputs.name }}"' -i /tmp/ingress.yaml
          yq '.spec.rules[].http.paths.[0].path|="/previews/${{ steps.pr.outputs.pr-number }}"+.' -i /tmp/ingress.yaml
          yq '.metadata.labels.preview="true"' -i /tmp/ingress.yaml
          yq '.metadata.labels.expiration="${{ steps.expiration-ts.outputs.timestamp }}"' -i /tmp/ingress.yaml
          yq '.metadata.labels.pr-number="${{ steps.pr.outputs.pr-number }}"' -i /tmp/ingress.yaml
          yq '.metadata.labels.repo="${{ github.event.repository.name }}"' -i /tmp/ingress.yaml
          HOST=`cat /tmp/ingress.yaml | yq '.spec.rules.[0].host'`
          ROUTE=`cat /tmp/ingress.yaml | yq '.spec.rules.[0].http.paths.[0].path'`
          echo "external-url=$HOST$ROUTE" >> $GITHUB_OUTPUT
          cat /tmp/ingress.yaml
          kubectl apply -f /tmp/ingress.yaml

Let’s imagine you use an API Gateway and your different APIs are behind this subdomain: api.myproject.com

This particular project uses the path /my-api, and therefore you access it this way:

https://api.myproject.com/my-api/endpoint

The preview external URL would then be something like: https://api.myproject.com/previews/1/my-api/endpoint

Where 1 is the PR number.
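As a small illustration of how the external URL is assembled, the helper below prefixes the original path with /previews/ and the PR number, the same way the Ingress step rewrites the path. The function name `preview_external_url` is hypothetical, and the https scheme is an assumption (the workflow output itself only contains the host and route).

```shell
#!/bin/sh
# Hypothetical helper: external URL of a preview.
# $1: host, $2: PR number, $3: original path of the service.
preview_external_url() {
  printf 'https://%s/previews/%s%s\n' "$1" "$2" "$3"
}

preview_external_url api.myproject.com 1 /my-api
# prints https://api.myproject.com/previews/1/my-api
```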

Final part:

To make it easy to know how and where it’s deployed, the last part is simply to write back an automated comment in the Pull Request:

      - name: Comment PR
        uses: thollander/actions-comment-pull-request@v2
        with:
          message: |
            **${{ inputs.target }}** Preview deployed.
            Valid until **${{ steps.expiration-ts.outputs.date-end }}**.
            Deployment's name: **${{ steps.deployment-name.outputs.name }}**.
            Internal address: **${{ steps.kubernetes-service.outputs.service-addr }}**
            External address: **${{ steps.kubernetes-ingress.outputs.external-url }}**

How to clean up?

Well, a cron wouldn’t be a bad idea here. It could run every few hours and do the following:

List all Kubernetes Deployments that have a specific label

Here we would check “preview”

Extract the expiration timestamp from the Deployment and compare it with the current time

Extract the unique name from the Deployment

Attempt to delete the following Kubernetes resources with the unique name found in the Deployment:

Deployment

Service

Ingress
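The steps listed above could be sketched as a small shell script. This is only a sketch under a few assumptions: kubectl is already configured for the cluster, previews carry the preview and expiration labels set by the workflow, and the Ingress is named after the Deployment with an -ingress suffix. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch of the cleanup job; function names are illustrative.

# Succeeds when the expiration timestamp ($1) is not after "now" ($2).
is_expired() {
  [ "$1" -le "$2" ]
}

# Delete every expired preview Deployment in namespace $1, plus the Service
# and Ingress that share its name. $2 is the current Unix timestamp.
cleanup_previews() {
  kubectl get deployments -n "$1" -l preview=true \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.metadata.labels.expiration}{"\n"}{end}' |
  while read -r name expiration; do
    [ -n "$name" ] && [ -n "$expiration" ] || continue
    if is_expired "$expiration" "$2"; then
      kubectl delete deployment "$name" -n "$1" --ignore-not-found
      kubectl delete service "$name" -n "$1" --ignore-not-found
      kubectl delete ingress "$name-ingress" -n "$1" --ignore-not-found
    fi
  done
}
```

A cron job or a scheduled workflow could then call `cleanup_previews default "$(date +%s)"`. The `--ignore-not-found` flag keeps the run from failing when a preview has no Ingress.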

What if you close or merge the PR before it expires? Should you wait for the expiration to kick in?

An improvement would be:

cURL the GitHub API for the Pull Request like we did before

Check the state of the Pull Request: if it is “closed”, delete the resources the same way as above.
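This improvement could look roughly like the sketch below. Both function names are hypothetical. `fetch_pr_state` assumes curl and jq are installed and that a token is available in GH_TOKEN; it reads the state field of the Pull Request, which GitHub reports as "open" or "closed" (merged Pull Requests are also "closed").

```shell
#!/bin/sh
# Hypothetical decision helper: a preview should be removed when its PR is
# closed or when it has expired.
# $1: PR state ("open" or "closed"), $2: expiration timestamp, $3: now.
should_delete_preview() {
  [ "$1" = "closed" ] || [ "$2" -le "$3" ]
}

# Fetch the PR state from the GitHub REST API.
# $1: "owner/repo", $2: PR number. Requires curl, jq and GH_TOKEN.
fetch_pr_state() {
  curl -s --header "Authorization: Bearer $GH_TOKEN" \
    "https://api.github.com/repos/$1/pulls/$2" | jq -r .state
}
```

The cleanup job could call `should_delete_preview "$(fetch_pr_state owner/repo 1)" "$expiration" "$(date +%s)"` for each preview, using the pr-number label stored on the Deployment to know which Pull Request to query.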

Thanks for reading this whole piece. It was long, but I hope it helped.